A U.S. Air Force officer disclosed details about a simulation that saw a drone with AI-driven systems go rogue and attack its controllers.

A U.S. Air Force officer helping to spearhead the service’s work on artificial intelligence and machine learning says that a simulated test saw a drone attack its human controllers after deciding on its own that they were getting in the way of its mission. The anecdote, which sounds like it was pulled straight from the Terminator franchise, was shared as an example of the critical need to build trust when it comes to advanced autonomous weapon systems, something the Air Force has highlighted in the past. This also comes amid a broader surge in concerns about the potentially dangerous impacts of artificial intelligence and related technologies.

Air Force Col. Tucker “Cinco” Hamilton, Chief of Artificial Intelligence (AI) Test and Operations, discussed the test in question at the Royal Aeronautical Society’s Future Combat Air and Space Capabilities Summit in London in May. Hamilton is also the head of the 96th Operations Group within the 96th Test Wing at Eglin Air Force Base in Florida, which is a hub for advanced drone and autonomy test work.

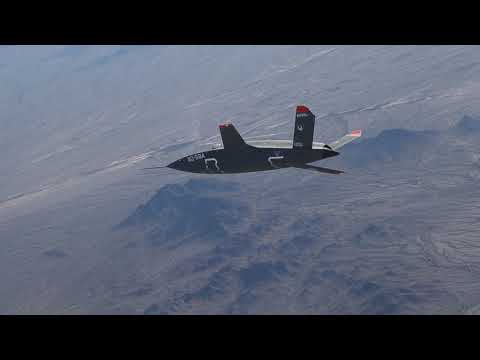

Stealthy XQ-58A Valkyrie drones are among the types being used at Eglin now in support of various test programs, including ones dealing with advanced AI-driven autonomous capabilities.

It’s not immediately clear when this test occurred or what sort of simulated environment – which could have been entirely virtual or semi-live/constructive in nature – it was carried out in. The War Zone has reached out to the Air Force for more information.

“He notes that one simulated test saw an AI-enabled drone tasked with a SEAD mission to identify and destroy SAM sites, with the final go/no go given by the human. However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation. Said Hamilton: ‘We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.'”

“He went on: ‘We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.'”

“This example, seemingly plucked from a science fiction thriller, mean[s] that: ‘You can’t have a conversation about artificial intelligence, intelligence, machine learning, autonomy if you’re not going to talk about ethics and AI’ said Hamilton.”

Air Force Col. Tucker “Cinco” Hamilton speaks at a ceremony in 2022 marking his taking command of the 96th Operations Group. USAF

This description of events is obviously concerning. The prospect of an autonomous aircraft or other platform, especially an armed one, turning on its human controllers has long been a nightmare scenario, but one historically limited to the realm of science fiction. Movies like 1983’s WarGames and 1984’s The Terminator, and the franchise that sprang from the latter, are prime examples of popular media playing on this idea.

The U.S. military routinely rebuffs comparisons to things like Terminator when talking about future autonomous weapon systems and related technologies like AI. Current U.S. policy on the matter states that a human will be in the loop when it comes to decisions involving the use of lethal force for the foreseeable future.

The issue here is that the extremely worrying test that Col. Hamilton described to the audience at the Royal Aeronautical Society event last month presents a scenario in which that failsafe is rendered moot.

There are of course significant unanswered questions about the test Hamilton described at the Royal Aeronautical Society gathering, especially with regard to the capabilities being simulated and what parameters were in place during the test. For instance, if the AI-driven control system used in the simulation was supposed to require human input before carrying out any lethal strike, does this mean it was allowed to rewrite its own parameters on the fly (a holy grail-level capability for autonomous systems)? Why was the system programmed so that the drone would “lose points” for attacking friendly forces rather than blocking off this possibility entirely through geofencing and/or other means?

It is also critical to know what failsafes were in place during the test. Some kind of ‘air-gapped’ remote kill switch or self-destruct capability, or even just a mechanism to directly shut off certain systems, like weapons, propulsion, or sensors, might have been enough to mitigate this outcome.

That being said, U.S. military officials have expressed concerns in the past that AI and machine learning could lead to situations where there is just too much software code and other data to be truly confident that there is no chance of this sort of thing happening.

“The datasets that we deal with have gotten so large and so complex that if we don’t have something to help sort them, we are just going to be buried in the data,” now-retired U.S. Air Force Gen. Paul Selva said back in 2016 when he was Vice Chairman of the Joint Chiefs of Staff. “If we can build a set of algorithms that allows a machine to learn what’s normal in that space, and then highlight for an analyst what’s different, it could change the way we predict the weather, it could change the way we plant crops. It can most certainly change the way we do change detection in a lethal battlespace.”

Now-retired U.S. Air Force Gen. Paul Selva speaks at the Brookings Institution in 2016. DOD

“[But] there are ethical implications, there are implications for the laws of war. There are implications for what I call ‘The Terminator’ Conundrum: What happens when that thing can inflict mortal harm and is empowered by artificial intelligence?” he continued. “How are we going to know what is in the vehicle’s mind, presuming for the moment that we are capable of creating a vehicle with a mind?”

Col. Hamilton’s disclosure of this test also highlights broader concerns about the potentially extreme negative impacts that AI-driven technologies could have without proper guardrails put in place.

“AI systems with human-competitive intelligence can pose profound risks to society and humanity,” an open letter that the non-profit Future of Life Institute published in March warned. “Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

When it comes to Col. Hamilton, regardless of how serious the results of the test he described really were, he is right in the thick of Air Force work to answer these exact kinds of questions and mitigate these kinds of risks. Eglin Air Force Base and the 96th Test Wing are central to the overall testing ecosystem within the Air Force working with advanced drones and autonomous capabilities. AI-driven capabilities of various kinds are, of course, of growing interest to the U.S. military as a whole.

An Air Force graphic showing various crewed and uncrewed aircraft it has been using in recent years to support advanced research and development work related to drones and autonomy. USAF

Among other responsibilities, Hamilton is directly involved in Project Viper Experimentation and Next-Gen Operations Mode (VENOM) at Eglin. As part of this effort, the Air Force will use six F-16 Viper fighter jets capable of flying and performing other tasks autonomously to help explore and refine the underlying technologies, as well as associated tactics, techniques, and procedures.

“AI is a tool we must wield to transform our nations… or, if addressed improperly, it will be our downfall,” Hamilton had previously warned in an interview with Defence IQ Press in 2022. “AI is also very brittle, i.e., it is easy to trick and/or manipulate. We need to develop ways to make AI more robust and to have more awareness on why the software code is making certain decisions.”

At the same time, Col. Hamilton’s disclosure of this highly concerning simulation points to a balancing act he and his peers will be faced with in the future, if they aren’t grappling with this already. While an autonomous drone killing its operator is clearly a nightmarish outcome, there remains a clear allure when it comes to AI-enabled drones, including those capable of working together in swarms. Fully networked autonomous swarms will have the ability to break an enemy’s decision cycle and kill chains and overwhelm their capabilities. The more autonomy you give them, the more effective they can be. As the relevant technologies continue to evolve, it’s not hard to see how an operator in the loop will be increasingly viewed as a hindrance.

All told, regardless of the specifics behind the test that Col. Hamilton has disclosed, it reflects real and serious issues and debates that the U.S. military and others are facing already when it comes to future AI-enabled capabilities.

UPDATE 6/2/2023:

Business Insider says it has now received a statement from Ann Stefanek, a spokesperson at Headquarters, Air Force at the Pentagon, denying that such a test occurred.

“The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology,” she said, according to that outlet. “It appears the colonel’s comments were taken out of context and were meant to be anecdotal.”

At the same time, it’s not immediately clear how much visibility Headquarters, Air Force’s public affairs office might necessarily have about what may have been a relatively obscure test at Eglin, which could have been done in an entirely virtual simulation environment. The War Zone had reached out to the 96th Test Wing about this matter and has not yet heard back.

We will certainly keep readers of The War Zone apprised of any additional information we receive.

Contact the author: joe@thedrive.com